Assumptions and Limitations

Assumptions

Definition of Self-Service and Communities

For our purposes, self-service and community interactions are those in which customers use information (instructional, troubleshooting, recommendations) that improves their success and productivity with our products and services, and where the user's intent is to solve an issue rather than to purchase, design, or obtain value-added services.

See Glossary of Terms for more definitions used in this project.

Limitations

Given the varying maturity of self-service deployments and resources available, we provide "good," "better," and "best" measurement options.

Every Consortium Member company that worked on this project brought its own handful of indicators, and everyone felt their indicators could be improved. Different business models need different metrics. For example, in clickstream analysis, companies differ in their level of sophistication and in where their journey map says the analysis should start.

Not all unsuccessful self-service or community attempts result in case creation, and not all successful self-service or community engagements represent an avoided case. While we attempt to distinguish among self-service, community, and assisted interactions, customers may also be solving in parallel while a case is open. We cannot accurately measure all scenarios at scale.

Given this complexity, the most useful strategy is to trend against yourself: establish a baseline for your organization and measure your progress against it.
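
As an illustration of trending against yourself, the following is a minimal sketch, assuming you track one indicator per period (for example, a monthly self-service success rate in percent). The function names, the choice of the first three periods as the baseline window, and the sample figures are all hypothetical and are not prescribed by this project.

```python
from statistics import mean

def baseline(values, periods=3):
    """Average of the first `periods` observations (hypothetical baseline window)."""
    if len(values) < periods:
        raise ValueError("not enough observations to establish a baseline")
    return mean(values[:periods])

def trend_against_baseline(values, periods=3):
    """Percent change of each later observation relative to the baseline."""
    base = baseline(values, periods)
    if base == 0:
        raise ValueError("baseline is zero; percent change is undefined")
    return [round((v - base) / base * 100, 1) for v in values[periods:]]

# Hypothetical monthly indicator values, in percent (illustrative only).
monthly_indicator = [40, 42, 38, 44, 46]
changes = trend_against_baseline(monthly_indicator)
print(changes)
```

The absolute value of the indicator matters less here than its direction over time, which is why the sketch reports change relative to your own starting point rather than against an external benchmark.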

Questions That Require Assumptions to Answer

- How many customers with support demand never reached a place where we can measure their engagement? (e.g., they started in Google and stayed there)

- What does a successful engagement look like? There are numerous possibilities for a successful pattern of engagement for various personas (break/fix versus goal-oriented tasks versus long-form learning) and from different origins (Google, Direct, Click Navigation vs. Search, In-Product Help).

- Are anonymous users customers? Members experimenting with this report that, after adjusting for bots, approximately 90% of hits to their public self-service content come from external search engines (like Google). Based on clickstream data and surveys, it appears that a very large percentage of those hits are from customers who did not make the effort to log into the support portal.

Metrics and Measurement Challenges

- Sessions: We measure by sessions, but not all sessions are equal. We aim to measure sessions that provide value, not just the total quantity of sessions. This may mean figuring out how to remove non-valuable / ineligible sessions from your count.

- Data sources: Measurement tools (Google Analytics versus SEMrush versus internal measures) may differ in what they can see and in how they measure it.

- Effort: What is a high effort versus low effort visit? It's a subjective measurement that constantly evolves.

- Goals: Measurement goals change based on user persona or use case.

- Bounce Rate: Bounce rate is not a good measure of quality because a single-page session can be successful. Bounce calculations can also be affected by event tracking. Better measures include time on page, scroll percentage, and other contextual signals.

- Article Surveys: These have limited value due to low participation (~1.5%). Article feedback surveys also lack user context: are customers rating the article quality or their overall experience with their issue?

- Context: Not all metrics are actionable or have a clear 'why'. When self-service fails, did customers not find what they were looking for, or did they not understand the article they read? Often, we have to drill down into supporting or related metrics to understand the context of the data.
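
To make the points about sessions and bounce rate concrete, here is a minimal sketch, assuming session records are available with a bot flag, an internal-traffic flag, a page count, time on page, and scroll percentage. The `Session` fields, the treatment of internal traffic as ineligible, and the thresholds are hypothetical choices for illustration; each organization would need to set its own.

```python
from dataclasses import dataclass

@dataclass
class Session:
    is_bot: bool            # flagged as automated traffic
    is_internal: bool       # assumption: employee traffic is treated as ineligible
    pages_viewed: int
    seconds_on_page: float
    scroll_pct: float       # 0-100, deepest scroll position reached

# Hypothetical thresholds; tune these against your own data.
MIN_SECONDS = 30
MIN_SCROLL_PCT = 50

def eligible(s: Session) -> bool:
    """Keep only sessions that could represent real customer demand."""
    return not s.is_bot and not s.is_internal

def engaged(s: Session) -> bool:
    """Contextual signal used instead of treating every single-page visit as a bounce."""
    return s.seconds_on_page >= MIN_SECONDS or s.scroll_pct >= MIN_SCROLL_PCT

def summarize(sessions):
    kept = [s for s in sessions if eligible(s)]
    single = [s for s in kept if s.pages_viewed == 1]
    return {
        "total": len(sessions),
        "eligible": len(kept),
        "single_page": len(single),
        "engaged_single_page": sum(engaged(s) for s in single),
    }

# Illustrative records only; not real data.
sample = [
    Session(False, False, 1, 95.0, 80.0),   # single page, read thoroughly
    Session(False, False, 1, 4.0, 10.0),    # single page, left quickly
    Session(True, False, 1, 1.0, 0.0),      # bot, excluded
    Session(False, False, 3, 200.0, 60.0),  # multi-page visit
]
summary = summarize(sample)
print(summary)
```

In this sketch a classic bounce calculation would count both single-page sessions as bounces, whereas the contextual check separates the visit that was likely read from the one that was abandoned. It still cannot say whether the customer's issue was resolved, which is the context limitation noted above.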

Value of the Knowledge Base

The knowledge base does not possess its own value but is an enabler of many other things that have great value, including:

- The assisted model (improve accuracy of resolution, reduce time to relief, improve time to proficiency/upskilling)

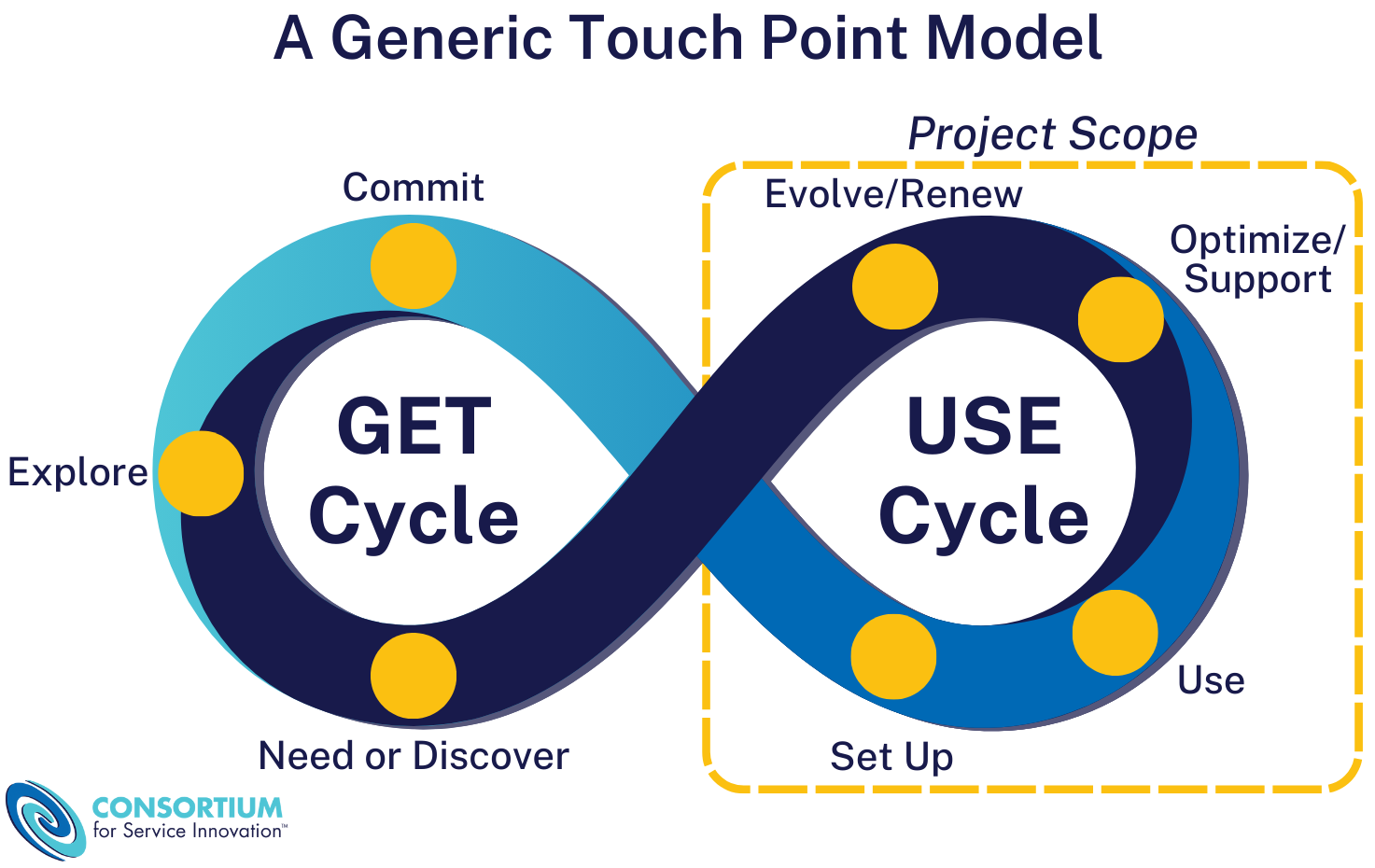

- Evolve Loop activities: mining of trends and patterns to improve products and services

- Self-service engagements (improving customer success and productivity by empowering customers to research and self-solve)

- Lead-gen, cross-sell, up-sell

- Dispatch avoidance (field service, desk side service)

- Improve time to resolve and accuracy of repair actions (field service, desk side support)

- Improve field service capability and efficiency

- Reduce customer effort