Introduction

Consortium for Service Innovation Members recognized early on that KCS is a journey, not a destination. Built into the KCS model is a process for continuous learning and improvement, and there are milestones along the way. As with any journey, there are periods of travel and periods of rest. However, if we rest too long or in the wrong place, the initiative loses momentum and the benefits are not sustained.

KCS: The Great Enabler

Members of the Consortium who have been successful with a KCS adoption report that having a good knowledge base built from the experience of resolving customer issues has significant and long-term benefits, including:

- Operational efficiency

- Reduced onboarding time

- Improved employee capability and job satisfaction

- Improved customer experience, success, and loyalty

- Improved speed and consistency of answers delivered

- Improved customer success with self-service

- Organizational learning that drives high-value improvements in:

  - Product and service offerings (usability, features, and functionality)

  - Processes & policies

In addition to these benefits, we call KCS the "great enabler" because a healthy knowledge base enables the use of emerging digital automation capabilities including:

- Automatic classification of content

- Predictions

- Recommendations

- Optimization

Good news and bad news: KCS thrives on change.

This is good news, since most of us work in environments where the rate of change is accelerating.

The bad news is, if the organization doesn't change its measures at the right points along the journey, KCS will stall. Knowledge workers will not maintain engagement and the benefits will dissipate.

KCS Enables Service Excellence

What do we mean by service excellence? The Consortium Members have defined service excellence as “maximizing customer-realized value from our products and services”. This simple definition is changing the role support plays within an organization. It opens up numerous opportunities beyond just resolving cases or incidents. Responding to requests (incidents or cases) is a one-to-one model. Responding to requests within a KCS implementation is a one-to-many model. It leverages what we learn while resolving requests.

The next question is what do we mean by “realized value”?

The baseline for value realization is a function of customer expectations. There are three key elements to value realization.

- Capability – If customers can accomplish what they expected with our offering, they are realizing value.

- Effort – If customers can accomplish what they expected with the expected amount of effort (in the expected amount of time), they are realizing value.

- Experience – If customers have a pleasant emotional experience while accomplishing what they expected, they are realizing value.

In the technology world, we often focus on these value factors in the order listed. That is, features and functionality are often the top priority. However, in the hospitality industry, the order is reversed. Restaurants, hotels, and amusement parks (think Disney) focus first on the experience. No matter the order, each of the three elements contributes to a customer’s sense of value, measured against their expectations. As technology companies move from on-premises offerings to software as a service or cloud-based offerings, they need a more balanced view of the importance of each of the three factors.

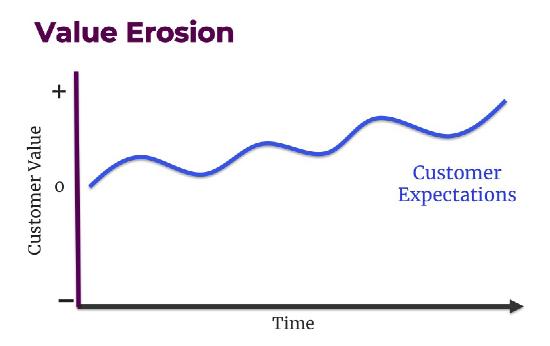

Value is an abstract thing; it is unique to each of us as individuals because it is based on our expectations and it is heavily influenced by our past experiences. This is why the customer expectation line in the model is wavy. Another dynamic about expectations is that the better we are at meeting or exceeding customer expectations, the higher the expectation becomes: expectations are not static. This is why the expectation line goes up over time. Expectations about our products, services, and interactions are not set by us alone; they are also influenced by the customer’s experiences with others. If other companies our customers interact with are improving the customer’s capability, reducing their effort, and creating a pleasant experience, it raises their expectations of us.

Value Erosion and Value Add

Two key concepts that are fundamental to the Consortium’s work are the Value Erosion and Value Add models. These models address the dynamics of maximizing customer value realization from our products and services.

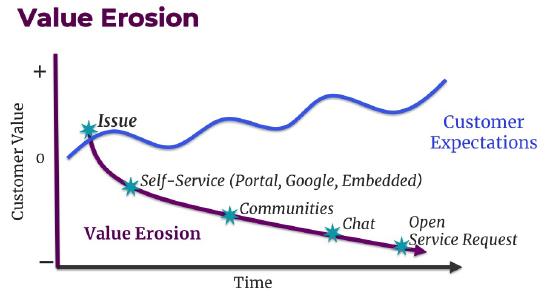

Value erosion happens when a customer encounters an issue. We define an issue as anything that disrupts the customer’s ability to be successful with our products or services. It could be a question about how to do something, a feature not working as expected, or a question about a policy or process. Instead of getting their work done, the customer is now spending time pursuing a resolution to the issue. While the typical starting point for customers in pursuit of a resolution is to search Google, they will try a number of things to find a resolution. If they are unsuccessful in finding a resolution, and the issue is important, the customer may open a case or incident as the last resort. By this time, the value erosion is very deep.

We want to minimize value erosion by facilitating customer success in finding a resolution as early in their pursuit of a resolution as possible.

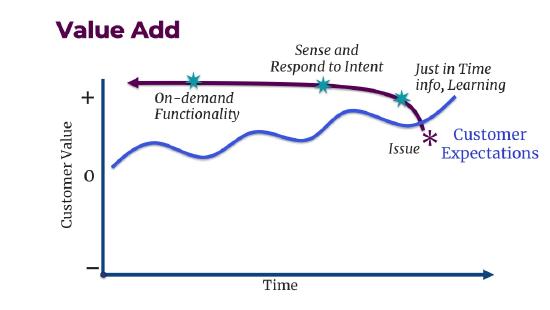

Value add is the other side of the value coin. Can we increase the customer’s capability, reduce their effort, and create a pleasant experience without them experiencing an issue? Customer service and support has lived in the value erosion model for years. The value add opportunity is the new frontier for service and support. It is dependent on knowing a lot about our customers, how they use our offerings, and how to better serve them. It is dependent on the quality and completeness of our data (or knowledge) and our ability to leverage emerging digital automation capabilities.

Our goal is to shift left over time. That is....

How do we measure the reduction of value erosion and the enablement of value add? This paper describes the Consortium Members’ thoughts and experience in answering this question.

It is a journey that starts with capturing knowledge. Knowledge is what enables us to minimize value erosion and create added value. As we have mentioned, a successful KCS adoption requires a shift from a relatively simple, transaction-based model to a broader, more complex value-based model. As we have explored this new measurement model a few key concepts have emerged.

Key Measurement Concepts

Embracing the definition of service excellence (maximizing customer-realized value and looking at support from the customer’s or requestor’s point of view) dramatically expands the scope of our measurement model.

Conversations about measures are difficult, especially when we are trying to measure things we cannot discretely count. Things like value realization or customer success with self-service are difficult to precisely measure. Because there is no one indicator of value realization, we have to infer value realization by looking at multiple indicators, each of which by itself has ambiguity. It is helpful to keep in mind that relevance, consistency, and statistical significance are more important than precision.

As we model and try to quantify customer behavior and experience, we have to deal with buckets of ambiguity.

There are a few concepts that are helpful in dealing with this expanded view:

- Triangulation

- Relevance vs precision

- Consistency vs accuracy

- Confidence interval

We will briefly review each of these concepts.

Triangulation

Triangulation is a technique we have found to be very helpful when trying to assess things that cannot be directly counted. We can infer the realization of value or success with self-service if we look at it from multiple points of view.

A GPS-based system (think Google Maps) can tell us our longitude, latitude, and altitude with a high degree of accuracy based on signals from multiple satellites in space, but a signal from one satellite is not sufficient. A GPS system needs signals from at least three satellites in different positions in space in order to determine our location.

The name triangulation is a bit of a misnomer in that it implies three signals; most GPS systems will not provide a location unless they have signals from five to seven satellites in different positions. This improves the accuracy and reliability of the system. We find the same is true when talking about measures for value realization or things we cannot discretely count. We need at least three different perspectives. Five to seven indicators from different points of view is even better and increases our accuracy and confidence.

In the KCS v6 Practices Guide, we describe the use of radar charts as a way to visualize multiple indicators that identify individuals and teams who are creating value in a knowledge-centered environment.

We also find the triangulation model helpful in assessing customer success with self-service. Looking ahead to the Build Proficiency phase of adoption, we discuss the three key indicators of self-service success: customer survey data, clickstream analysis (customer behaviors in using self-service), and variation in case volume normalized to the install base we are supporting. Any one of these indicators on its own is not sufficient to assess the customer experience. If we look at all three, it gives us an indication of customer success.
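As a rough illustration of the triangulation idea, the sketch below checks whether several indicators point in the same direction before drawing a conclusion. The function name `triangulate`, the indicator names, and the +1/0/-1 encoding are our own assumptions for illustration; they are not part of the KCS methodology. The caller decides what "improving" means for each indicator (for example, a drop in case volume normalized to the install base would be encoded as +1).

```python
def triangulate(signals, minimum=3):
    """Summarize whether a set of indicators agree.

    signals: dict mapping a (hypothetical) indicator name to
             +1 (suggests improvement), -1 (suggests decline),
             or 0 (no clear change).
    minimum: fewest indicators we are willing to draw an inference from.
    """
    if len(signals) < minimum:
        return "insufficient"  # one or two perspectives are not enough

    positive = sum(1 for s in signals.values() if s > 0)
    negative = sum(1 for s in signals.values() if s < 0)

    if positive == len(signals):
        return "consistent improvement"
    if negative == len(signals):
        return "consistent decline"
    return "mixed"  # the indicators disagree; investigate before concluding


# Illustrative values only, not real data.
self_service = {
    "customer_survey": +1,
    "clickstream_analysis": +1,
    "normalized_case_volume": +1,
}
result = triangulate(self_service)
```

A "mixed" result is not a failure; it is a prompt to look more closely at why the perspectives disagree.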

Relevance vs. Precision

If I am going on a trip to visit one of our Consortium Members, say Dell Technologies in Hopkinton, MA, I might tell my spouse, “I am going to Boston to visit one of our Members.” This is not precise, but it is relevant and sufficient. I fly into the Boston airport and I will be in the greater Boston area. If I am talking with a Consortium colleague, I am likely to say “I am going to visit Dell in Hopkinton, MA.” This is more precise, but I still don’t have to give the address of the Dell office.

When I get to the Boston airport and rent a car, I have to tell my GPS map program where I am going. Hopkinton as my destination is no longer sufficient; I have to enter the address of the office in order to arrive at the right place. If I were talking with a cartographer, I might provide the most precise representation of my destination with the longitude and latitude of the Dell office: “I am going to latitude 42.194363, longitude -71.543858.” However, if I told my spouse the latitude and longitude of my destination, it would be meaningless. It is not relevant, and they would probably take me to the hospital instead of the airport.

How many times have we gotten into endless discussions about measures and their accuracy or precision? Precision is not as important as relevance. Relevance is about being useful for a purpose.

Consistency vs. Accuracy

The trend is more important than the number. As we deal with indicators that have inherent inaccuracies, the trend of an indicator over time can be informative so long as the data collection and inherent inaccuracies are consistent. For example, if we have a data collection capability and a set of assumptions about the number of customer issues being pursued through self-service sessions, and we normalize that to the size of the audience being served (e.g. the install base or licenses sold), the trend of customer issues being pursued can be informative. Because of the assumptions, the number of issues being pursued will not be 100% accurate, nor will the size of the audience. Both are very hard to get precise data on. But if the data collection and assumptions remain consistent, the trend over time will be informative. If we see an increase in the number of issues being pursued in self-service normalized to the size of the audience, we could infer that customers are finding value in self-service. And, if we have data from other perspectives, such as clickstream analysis and/or survey data about the customer’s experience that correlates or reinforces the assumptions about self-service value, it increases our confidence in those assumptions.
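The normalization and trend described above can be sketched in a few lines. This is a minimal illustration under our own assumptions: the function names, the "per 1,000" scaling, and the monthly figures are hypothetical, and the least-squares slope is just one simple way to summarize a trend.

```python
from statistics import mean


def normalized_rate(issues_pursued, audience_size, per=1000):
    """Issues pursued in self-service per `per` members of the audience."""
    if audience_size <= 0:
        raise ValueError("audience_size must be positive")
    return issues_pursued / audience_size * per


def trend_slope(values):
    """Least-squares slope of values against their period index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two periods to compute a trend")
    xs = range(n)
    x_bar = mean(xs)
    y_bar = mean(values)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


# Hypothetical monthly data: (estimated issues pursued, install base size).
# Both numbers are imprecise; what matters is that they are collected
# the same way every month.
monthly = [(1200, 10000), (1350, 10500), (1500, 11000), (1700, 11500)]

rates = [normalized_rate(issues, audience) for issues, audience in monthly]
slope = trend_slope(rates)  # positive means the normalized rate is rising
```

Because the same assumptions are applied to every period, a consistent bias in the counts affects the level of each rate but largely leaves the direction of the slope intact, which is the point of favoring consistency over accuracy.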

Confidence Interval

Do we have enough data to trust the results? Like the other measures discussed here, survey data is imprecise. There are numerous challenges in creating good surveys. Writing good questions is itself a bit of an art. Unanticipated interpretations of questions and response bias introduce some uncertainty in the results. The sample size is another important factor in assessing survey data. It is helpful to know the confidence interval or the margin of error associated with the survey results. More information about confidence intervals and sample size, as well as a handy tool for assessing the confidence interval, can be found with an online sample size calculator.
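For readers who want to see what such a calculator does, here is a minimal sketch of the standard margin-of-error formula for a survey proportion, using the normal approximation. The function name and parameters are our own; `z=1.96` corresponds to a 95% confidence level, `proportion=0.5` is the most conservative assumption, and the optional finite population correction applies when the sample is a large share of a known population. It assumes a simple random sample and does not account for response bias.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z=1.96, population=None):
    """Approximate margin of error for a surveyed proportion.

    sample_size: number of survey responses
    proportion:  observed share giving a particular answer (0.5 is worst case)
    z:           z-score for the confidence level (1.96 for about 95%)
    population:  optional total audience size for finite population correction
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= proportion <= 1:
        raise ValueError("proportion must be between 0 and 1")

    standard_error = math.sqrt(proportion * (1 - proportion) / sample_size)

    if population is not None:
        if sample_size > population:
            raise ValueError("sample_size cannot exceed population")
        if population > 1:
            # Finite population correction shrinks the error for large samples.
            standard_error *= math.sqrt(
                (population - sample_size) / (population - 1)
            )

    return z * standard_error


# With 400 responses and the worst-case proportion, the margin of error
# is roughly plus or minus 5 percentage points at 95% confidence.
moe_400 = margin_of_error(400)
```

Quadrupling the sample size only halves the margin of error, which is why a modest but consistent sample is often sufficient for trend purposes.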