Predictive Customer Engagement

Hosted by PTC, Needham, MA, August 24-26

Notes to add? Request write access or send notes directly to kmurray@serviceinnovation.org.

- Attendees

- Consortium YouTube Channel

- Twitter: #CSITM

- Consortium Recommended Reading

- LinkedIn Group: Consortium for Service Innovation

Wednesday, August 24 - DAY 1

Welcome and introductions - Melissa George, Consortium

Predictive Policing in Manchester, NH: news clip

Foundational principles of predictive engagement:

- user in control: opt-in (subscribe to notifications)

- timely

- relevant

- appropriate channel

- validated

- cared about

- advice? direction/action: actionable

- expectation setting

- trust

- willingness to try

- experience/history

- multiple data factors

- evolve over time

- personalization: own input into how you're interacting/interacted with

- interaction/input

- cost-benefit

- contextual

- where is the creepy line?

- co-opetition

- why?

Recap of work done around Predictive Customer Engagement - Greg Oxton, Consortium (slides: view, download)

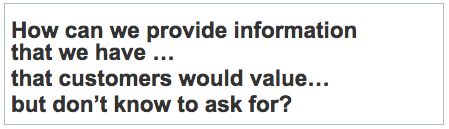

Review of the value erosion/value-add model: increased capability + reduced effort = added value

double-loop predictive model: data collection, associations, analysis/recommendations, presentation

- Shoshana Zuboff: the individual is the unit of measure (video summary of this idea: Executive Summit 2013)

- Doc Searls: the individual is the point of control (book: The Intention Economy)
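
The four stages of the double-loop model can be read as a pipeline. The sketch below is a minimal illustration under stated assumptions, not the Consortium's model: events are hypothetical (customer, symptom) pairs, "associations" are reduced to per-customer symptom counts, `KNOWN_FIXES` is a made-up knowledge base, and the second loop is reduced to measuring how many issued recommendations customers adopted.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

@dataclass
class Event:
    customer: str
    symptom: str

# Hypothetical knowledge base: normalized symptom -> recommended action.
KNOWN_FIXES = {"disk_full": "Archive old logs", "slow_query": "Rebuild index"}

def collect(raw):
    """Data collection: normalize raw (customer, symptom) pairs into events."""
    return [Event(customer, symptom.strip().lower()) for customer, symptom in raw]

def associate(events):
    """Associations: count how often each symptom occurs per customer."""
    by_customer = defaultdict(Counter)
    for event in events:
        by_customer[event.customer][event.symptom] += 1
    return by_customer

def recommend(associations):
    """Analysis/recommendations: map known symptoms to fixes, most frequent first."""
    return {
        customer: [KNOWN_FIXES[s] for s, _ in symptoms.most_common() if s in KNOWN_FIXES]
        for customer, symptoms in associations.items()
    }

def present(recommendations):
    """Presentation: one human-readable line per customer."""
    return [
        f"{customer}: {', '.join(actions) if actions else 'no action needed'}"
        for customer, actions in sorted(recommendations.items())
    ]

def feedback(adopted, recommendations):
    """Second loop: fraction of issued recommendations that customers adopted."""
    issued = sum(len(actions) for actions in recommendations.values())
    taken = sum(len(set(actions) & adopted.get(customer, set()))
                for customer, actions in recommendations.items())
    return taken / issued if issued else 0.0

if __name__ == "__main__":
    raw = [("acme", "Disk_Full "), ("acme", "slow_query"), ("globex", "unknown_error")]
    recs = recommend(associate(collect(raw)))
    print(present(recs))  # ['acme: Archive old logs, Rebuild index', 'globex: no action needed']
    print(feedback({"acme": {"Rebuild index"}}, recs))  # 0.5
```

The adoption figure from `feedback` is what would flow back into collection and association in a real second loop; here it is only reported.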

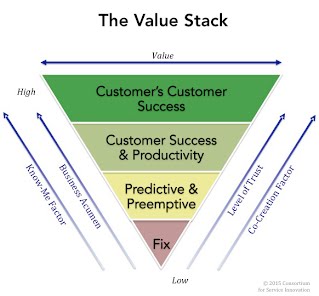

- The Value Stack (one-page PDF). Future work: Customer Presence Indicator?

- The Consortium's Master Deck on Value Models (view, download)

Preemptive Support and the Integration of Products and Services - Rex Martin Jr., EMC (slides: view, download)

- speed and ability to personalize and customize views are key

- Tableau as data viz tool for the workbooks (for services)

- MyService360 allows customers to visualize the value of the data

- measures change dramatically when you go predictive: you can't even measure page views anymore (customers don't need to come find the info because it has already been served up to them; a single page may have 15 widgets/15 pieces of content)

- design sessions with customers: 50-70% of RFEs in the environment have to be customer-driven (required to build what the customer wants vs. what internal teams want). Design sessions: Harvest, Process, Develop, Review

- Heard: "Online experience too complicated." Groundwork: architecture + big data technology presents what's actionable.

- Security (on a number of levels) is an issue for customizable views.

- Not "reporting" but interactive, evolving visualization

- Challenges:

- How to leverage and access data?

- How to get people to understand what we're trying to do? (political ownership)

- Presenting the goal as an actionable future reality (it's not a pipe dream)

- Making it iterative and fast: not an "18 month project" but displaying incremental progress

- intelligent swarming + KCS = agile support

Member Experiences: Connectivity is Key - Carl Knerr, Avaya (slides: view, download)

- Fire marshals instead of firefighters. Does every house have a fire detector?

- 2011: 88% of initial contacts came through the phone

- Mike Runda's 15 steps to getting people to stop calling you (related slides from Exec Summit 2013: view, download)

- Expert Systems℠: 90% of alarm service requests are auto-resolved, almost instantly, without human intervention (While You Were Sleeping report). Remaining SRs are resolved 5x faster because they start from specific, system-generated alarms

- Configuration Validation Tool: requires remote connectivity; it connects, runs a script, generates a human-readable report, and transfers the log and output to an Avaya server for access by authorized parties. Reduces time to resolve dramatically

- How far do we go proactively before we start eating away at professional/managed services' value prop?

- Risk Management Support Offer: proactive support through humans, not tooling. Direct customers only, adoption of upgrades & updates went from every 18 months to every 6 weeks

- Simplifying connectivity: connecting involved MANY steps, with required partner/customer action. Now there is a playbook for sales and self-service reporting for customers on connectivity health

- Optional policy server allows customers to create a rules engine around customer/Avaya automated connections
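
Avaya's optional policy server lets customers set rules around automated connections. A minimal first-match rules engine gives a feel for the idea; the rule fields (an operation pattern and allowed hours), the default-deny fallback, and the sample `POLICY` are assumptions for illustration, not how Avaya's product works.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

@dataclass(frozen=True)
class Rule:
    action: str                   # "allow" or "deny"
    operation: str                # glob pattern, e.g. "alarm.*"
    hours: range = range(0, 24)   # local hours during which the rule applies

@dataclass(frozen=True)
class ConnectionRequest:
    operation: str                # hypothetical, e.g. "alarm.forward" or "config.validate"
    hour: int                     # local hour (0-23) of the request

def evaluate(rules, request, default="deny"):
    """First matching rule wins; with no match, fall back to the default."""
    for rule in rules:
        if fnmatchcase(request.operation, rule.operation) and request.hour in rule.hours:
            return rule.action
    return default

# Hypothetical customer policy: alarms always flow out; config validation
# is only allowed in a 22:00-24:00 window; everything else is denied.
POLICY = [
    Rule("allow", "alarm.*"),
    Rule("allow", "config.validate", range(22, 24)),
    Rule("deny", "*"),
]
```

With this policy, `evaluate(POLICY, ConnectionRequest("config.validate", 14))` returns `"deny"` and the same request at hour 23 returns `"allow"`. First-match ordering keeps the customer's intent readable: specific permissions first, a catch-all last.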

The Internet of Things and KCS: Driving Proactive Services at PTC - Pierre Maraninchi and JC Coynel (slides: view, download)

- Proactive at PTC: acting in anticipation of future needs.

- If PTC engages first, it's proactive. If customer engages first, it's reactive.

- Goal is to make 80% of support proactive by 2020.

- Notification: information that tells you about something that has happened, is happening, or is going to happen

- Recommendation: a suggestion about what should be done (you should/you must...)

- Four (comfortable) initiatives in progress

- Creo: ID and prevent software crashes, map crash signature to solutions. Reusable per unique crash signature.

- Windchill: Recommend cache settings to optimize performance, hard code sophisticated logic. Personalized value per customer.

- 3rd party compatibility: easy ID of incompatibility, hard code sophisticated logic (paid web feature - first time a tool was provided based on entitlement level)

- Smart Article Search: machine-learning-based search engine, map article content to raw customer descriptions (article descriptions/titles), send email with top articles to searcher. Case opening has declined by 5x.

- Logic doesn't happen in the products - raw data gets uploaded to PTC, where logic is run and delivered via support portal

- Most organizations don't pay enough attention to customer context or rich environment statements

- Next up: Connect Products, Leverage Data, Expand from Cases to Recommendations, and Develop Support on Customer Journey

- 2017 Roadmap: expand recommendations with "smart KCS"?

- Recommendations from machine learning: machines can correlate symptoms and solutions, and issue resolutions can be built from experience/data.

- Open discussion:

- Best practices on recommendation management

- Expiration date?

- Best way to support customers with questions/issues on recommendations

- Are recommendations addressed to a user (which one?) or a company?

- How do we define the pipeline? Who implements infrastructure/recommender logic? How to maintain product evolution, how to deal with inaccurate recommendations?

- Customer adoption: facing the chasm

- Data confidentiality

- Customer return on effort invested in being proactive: are there any defined critical success factors for customer adoption?

- "Customers who follow recommendations get these results. Customers who don't get these."

- TTR for customers who are connected vs not

- Is this a support initiative or a corporate initiative? What messaging is used? (And who is sold on these initiatives?)
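
PTC's Creo initiative maps crash signatures to solutions, so one fix is reusable for every customer who hits the same crash. A sketch of that mapping, assuming (hypothetically) that a signature is a hash of the top three stack frames with hex addresses stripped; `TOP_FRAMES`, `SolutionStore`, and the article IDs are illustrative names, not PTC's implementation.

```python
import hashlib
import re

TOP_FRAMES = 3  # hypothetical: number of top stack frames that define a signature

def crash_signature(stack_trace: str) -> str:
    """Normalize a stack trace and hash its top frames into a stable signature."""
    frames = [line.strip() for line in stack_trace.splitlines() if line.strip()]
    # Replace hex addresses, which differ between runs and machines for the same crash.
    normalized = [re.sub(r"0x[0-9a-fA-F]+", "ADDR", frame) for frame in frames[:TOP_FRAMES]]
    return hashlib.sha256("\n".join(normalized).encode("utf-8")).hexdigest()[:16]

class SolutionStore:
    """Maps crash signatures to solution articles, so one fix serves every matching crash."""

    def __init__(self):
        self._by_signature = {}

    def link(self, stack_trace: str, article_id: str) -> None:
        self._by_signature[crash_signature(stack_trace)] = article_id

    def lookup(self, stack_trace: str):
        """Return the linked article ID, or None if this crash is new."""
        return self._by_signature.get(crash_signature(stack_trace))
```

Consistent with the note that logic runs at PTC rather than in the product, a lookup like this would sit on the server side after raw crash data is uploaded; an unmatched signature (`None`) is a candidate for a new article.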

Today, reinforcement for: value of co-creation, trust, importance of business acumen, and know-me factor! (Phew!)
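
PTC's working definition from Day 1 (if PTC engages first, it's proactive; if the customer engages first, it's reactive) makes the goal of 80% proactive support by 2020 directly measurable. A minimal sketch, assuming each engagement record simply notes who initiated it; the `"vendor"`/`"customer"` labels and all function names are hypothetical.

```python
from collections import Counter

PROACTIVE_GOAL = 0.80  # PTC's stated target: 80% of support proactive by 2020

def classify(initiator: str) -> str:
    """Vendor engages first -> proactive; customer engages first -> reactive."""
    if initiator == "vendor":
        return "proactive"
    if initiator == "customer":
        return "reactive"
    raise ValueError(f"unknown initiator: {initiator!r}")

def proactive_share(initiators) -> float:
    """Fraction of engagements that were proactive."""
    counts = Counter(classify(i) for i in initiators)
    total = sum(counts.values())
    return counts["proactive"] / total if total else 0.0

def meets_goal(initiators) -> bool:
    return proactive_share(initiators) >= PROACTIVE_GOAL
```

For example, a log of six vendor-initiated and four customer-initiated engagements gives a proactive share of 0.6, short of the goal.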

Thursday, August 25 - DAY 2

Evolving the Predictive Customer Engagement Model - Greg Oxton, Consortium (slides: view, download)

- Where customers are in the life cycle influences how you interact with those customers.

- Event management as defined by ITIL (Wikipedia)

- DMTF: Distributed Management Task Force creates standards for data transfers between companies

- A LONG conversation about defining dimensions of an event!

Open Space: meeting attendees nominate topics for exploration

- Customer Adoption: How to encourage participation in sharing data in order to get predictive capabilities?

- What does support look like when 80% of what you're delivering is proactive? Who's analyzing the data?

- How to link predictive customer engagement to business outcomes? How can we benchmark the data that comes from the Event Loop? What are success metrics for adoption? Proactive support: free, standard, or premium pricing?

- How to choose where to enable proactive support? What criteria? Who decides?

From May 2016 Team Meeting:

Customers for Life - Wyeth Goodenough, Salesforce.com (slides: view, download)

- Early warning system: implementation health (stickiness number), but more about consumption than value

- customer success = trusted advisor, gets lots of inside info because they're not sales

Avaya's blogs and videos, explaining the value of things like proactive support. (Thanks, Carl!)

- Proactive Support: http://bit.ly/1MU22tc

- Connectivity: http://bit.ly/1OVBScN

- Alarming: http://bit.ly/1Z7yJtW

- SLA Mon: http://bit.ly/1LR6Hty

Friday, August 26 - DAY 3

Measuring customer success with analytics to improve self-service search success and predict customer self-service behavior - Laurel Poertner, Coveo (slides: view, download)

- Customer Success Managers are trusted advisors at Coveo. They are included in the top tier; tiers two and three carry an annual fee (first six months free).

- Primary contact for customer and internal Coveo, manages strategic business reviews

- Enablers of Success: Findability, Completeness, Access, Navigation, Marketing

- Split metrics: average time to handle new vs. average time to handle known issues, because the actions you take to impact those metrics are very different. While average TTR in the call center will go up as the ratio shifts from known toward new, TTR for NEW should stay the same.

- Use triangulation to assess savings

- Definition of self-service success: "The rate that customers indicate they completed their desired transaction and/or found useful information." (how?!)

- Total Visits + Click - Submit = Success(?)

- Are Customer Success Managers KDEs?

- AQI for Recommendations? Who does that? Data Scientist? Needs to know context of the content.

- Feedback on Recommendations/automated info? Where? Who? How?
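
The split-metrics point is easiest to see with numbers. The figures below are hypothetical: known issues take 10 minutes and new issues take 60 minutes, and neither changes. Only the mix shifts, as self-service absorbs most of the known issues.

```python
def average_ttr(new_count, known_count, ttr_new, ttr_known):
    """Blended average time to resolve (minutes) across new and known issues."""
    total = new_count + known_count
    return (new_count * ttr_new + known_count * ttr_known) / total

TTR_NEW, TTR_KNOWN = 60, 10  # hypothetical per-issue times, held constant

before = average_ttr(new_count=20, known_count=80, ttr_new=TTR_NEW, ttr_known=TTR_KNOWN)
# Self-service deflects 60 of the 80 known issues; the 20 new issues still arrive.
after = average_ttr(new_count=20, known_count=20, ttr_new=TTR_NEW, ttr_known=TTR_KNOWN)

print(before)  # 20.0
print(after)   # 35.0
```

The blended average rises from 20 to 35 minutes even though TTR for new issues never moved from 60, which is why tracking the two separately avoids reading success as a regression.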

From web session February 23, 2016: Improving Self-Service Success

- Recording (YouTube)

- Greg Oxton, Consortium: slides (view, download)

- Bill Skeet, Cisco: slides (view, download)

Getting Customers Engaged - Greg Oxton, Consortium (slides: view, download) - starting at slide 41

- BJ Fogg: motivation, ability, trigger (Game Design models)

- www.behaviormodel.org - Captology: overlap of persuasion and computers

- Benefit has to exceed the effort required in order to promote action

- Future work: engagement model for Predictive Customer Engagement? How do we get the customer to do their part?

- Measuring success: of adoption, of quality of recommendations

- Develop a Customer Presence Index/Indicator. Are your customers really engaged in the areas where co-creation is required?

- Engagement assessment and analysis effectiveness could feed into CPI

- Measuring the whole Predictive Customer Engagement double loop

- Customer Success Initiative: next meeting broader scope. Austin in the spring?
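
BJ Fogg's model holds that a behavior happens when motivation, ability, and a trigger come together at the same moment. The sketch below is a deliberate simplification: it approximates Fogg's curved action line with the hypothetical rule `motivation * ability >= ACTION_LINE`, on 0-1 scales chosen for illustration. In predictive engagement terms, one reading is that a recommendation acts as the trigger, and lowering the effort it asks for raises ability.

```python
from dataclasses import dataclass

ACTION_LINE = 0.25  # hypothetical threshold; Fogg draws the action line as a curve

@dataclass
class Moment:
    motivation: float  # 0.0 (none) to 1.0 (very high)
    ability: float     # 0.0 (very hard to do) to 1.0 (very easy)
    triggered: bool    # was the customer prompted at this moment?

def behavior_occurs(m: Moment) -> bool:
    """Simplified B = MAT: act only when prompted AND above the action line.

    The product captures the trade-off in the model: high motivation can
    offset low ability, and very easy actions need less motivation.
    """
    return m.triggered and m.motivation * m.ability >= ACTION_LINE
```

For instance, a highly motivated customer facing a hard task (`Moment(0.9, 0.3, True)`) still acts, while the same customer with no trigger (`Moment(0.9, 0.9, False)`) does not.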

Outstanding Questions:

- What do we need to know from the KCS content?

- What do we need to know from people profiles and reputation?

- What do we need to know about interactions?

- Data elements/objects: "Recommendation" object required? What are those attributes? Severity indicator, risk assessment? Will see sample PTC Salesforce object

- Events come in as an object (case, system-generated, or action detected) but what comes out is a recommendation (not another event)

- Want to capture success rate, adoption rate of recommendations over time

- Human-to-human vs. human-to-machine
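
The open questions about a "Recommendation" object can be made concrete with a hypothetical data model: an event (case, system-generated, or detected action) goes in, a recommendation comes out, and adoption and success rates are tracked over time. The attribute names, scales, and rate definitions are assumptions drawn from the questions above, not the sample PTC Salesforce object.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional

class EventSource(Enum):
    CASE = "case"
    SYSTEM_GENERATED = "system-generated"
    ACTION_DETECTED = "action detected"

@dataclass
class Event:
    source: EventSource
    description: str

@dataclass
class Recommendation:
    event: Event                       # the event this recommendation answers
    action: str                        # what the customer should/must do
    severity: int                      # hypothetical scale: 1 (low) to 5 (critical)
    risk_score: float                  # hypothetical scale: 0.0 to 1.0
    issued: date
    adopted: Optional[bool] = None     # None until the customer responds
    succeeded: Optional[bool] = None   # outcome after adoption, if known

def adoption_rate(recs):
    """Share of recommendations with a customer response that were adopted."""
    responded = [r for r in recs if r.adopted is not None]
    return sum(r.adopted for r in responded) / len(responded) if responded else 0.0

def success_rate(recs):
    """Share of adopted recommendations with a known outcome that succeeded."""
    known = [r for r in recs if r.adopted and r.succeeded is not None]
    return sum(r.succeeded for r in known) / len(known) if known else 0.0

def adoption_by_month(recs):
    """Adoption rate per (year, month) of issue date, to follow the trend over time."""
    months = sorted({(r.issued.year, r.issued.month) for r in recs})
    return {m: adoption_rate([r for r in recs if (r.issued.year, r.issued.month) == m])
            for m in months}
```

Keeping `adopted` and `succeeded` as separate, nullable fields distinguishes "not yet answered" from "declined", so pending recommendations don't drag the adoption rate down.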