Technique 5.10: Content Health Indicators

As the organization gets started with KCS adoption, the KCS Coach plays a major role in the quality of the knowledge base content by reviewing the articles created by the KCS Candidates who do not yet have the permissions to put articles in a Validated state. The Coach's goal is to support the KCS Candidates in learning to do the Solve Loop, adhering to the content standard, and using the structured problem solving process.

KCS proposes a competency or licensing program that uses the Content Standard Checklist (previously Article Quality Index or AQI) and the Process Adherence Review or PAR (previously known as Process Integration Indicators or PII) to assess the knowledge worker's ability to consistently create articles that align to the content standard and follow the KCS workflow. By earning a license, knowledge workers are recognized for their KCS understanding and capability, thereby earning rights and privileges in the system.

The licensing program ensures that people understand the KCS workflow and the content standard. This program contributes to the level of quality and consistency of the articles in the knowledge base.

While there are many checks and balances in the KCS methodology to ensure quality articles, there are five key elements that contribute to article quality:

- A content standard that defines the organization's requirements for good articles

- Content Standard Checklist (below), which determines an article's alignment with the content standard

- Process Adherence Review (PAR) - are we following the KCS workflow?

- The licensing and coaching model

- A broad and balanced performance assessment model

The Content Standard Checklist

In previous iterations of the KCS methodology, we referred to this as the Article Quality Index. The idea was that organizations, especially those with large and distributed teams, must have consistent quality metrics for rating article quality and the performance of those contributing. In practice, organizations focused all of their attention on the resulting number as an indication of the quality of their knowledge base.

In reality, this is meant to be a coaching tool to help knowledge workers understand and remember how we are aligning our articles with the content standard, and perhaps to help the organization have a broad picture of how well knowledge workers are understanding and applying that content standard. Because we are addressing technical accuracy with the practice of "reuse is review," this is not meant to serve as a technical review or to look at other aspects of article value or quality.

The Content Standard Checklist can be customized and evolve over time, and should be consistent with the content standard for a "good article," quantifiable to facilitate reporting, and shared with both management and the individual (as part of a conversation about understanding and behavior - not about the number). To begin, we suggest these basic checks in the form of yes/no questions:

- Is the article Unique? - not a duplicate article; no other article with the same content has a creation date earlier than this article's creation date (this is a critical part of the Content Standard Checklist)

- Is the article Complete? - complete problem/environment/cause/resolution description and types

- Is the Content Clear? - statements are complete thoughts, not necessarily complete sentences (as appropriate)

- Does the Title Relate to Article? - title contains description of main environment, and main issue (cause if available)

- Are the Links Valid? - hyperlinks are persistently available to the intended future audience

- Is the Metadata Correct? - metadata set appropriately: article state, audience, type or other key metadata defined in the content standard

We capture the answers to these questions in a Content Standard Checklist spreadsheet. In the example below, we review a handful of articles that each knowledge worker has linked or created in a specified period of time, and tally the number of "yes" answers in each column. The resulting percentage reflects how often a knowledge worker (or group of knowledge workers) followed the content standard while interacting with those articles.
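The tally itself is simple arithmetic. Here is a minimal Python sketch, assuming each reviewed article is stored as a set of yes/no answers keyed by hypothetical short names for the six checks. The `Review` class and function names are illustrative and are not part of any KCS tool.

```python
from dataclasses import dataclass

# Hypothetical short names for the six basic yes/no checks listed above.
CRITERIA = ("unique", "complete", "clear", "title", "links", "metadata")


@dataclass
class Review:
    """One sampled article and the reviewer's yes/no answer to each check."""
    article_id: str
    answers: dict[str, bool]


def yes_rate_by_criterion(reviews: list[Review]) -> dict[str, float]:
    """Percentage of reviewed articles answered 'yes' for each check (one column each).

    A missing answer is treated as 'no'.
    """
    if not reviews:
        raise ValueError("no reviewed articles")
    return {
        c: 100.0 * sum(r.answers.get(c, False) for r in reviews) / len(reviews)
        for c in CRITERIA
    }


def overall_yes_rate(reviews: list[Review]) -> float:
    """Share of all yes/no answers that were 'yes' for one worker or group."""
    if not reviews:
        raise ValueError("no reviewed articles")
    yes = sum(r.answers.get(c, False) for r in reviews for c in CRITERIA)
    return 100.0 * yes / (len(reviews) * len(CRITERIA))
```

With two reviewed articles, one passing every check and one flagged as a duplicate, the "unique" column comes out at 50% and the overall rate at 11 of 12 answers.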

While we can use this as an indicator of the general health of the articles in the knowledge base, it is best used as an indicator of the understanding of the KCS content standard. Tangible, quantified information like this improves the quality of feedback we can provide to individual knowledge workers to enhance skills development and drive article health.

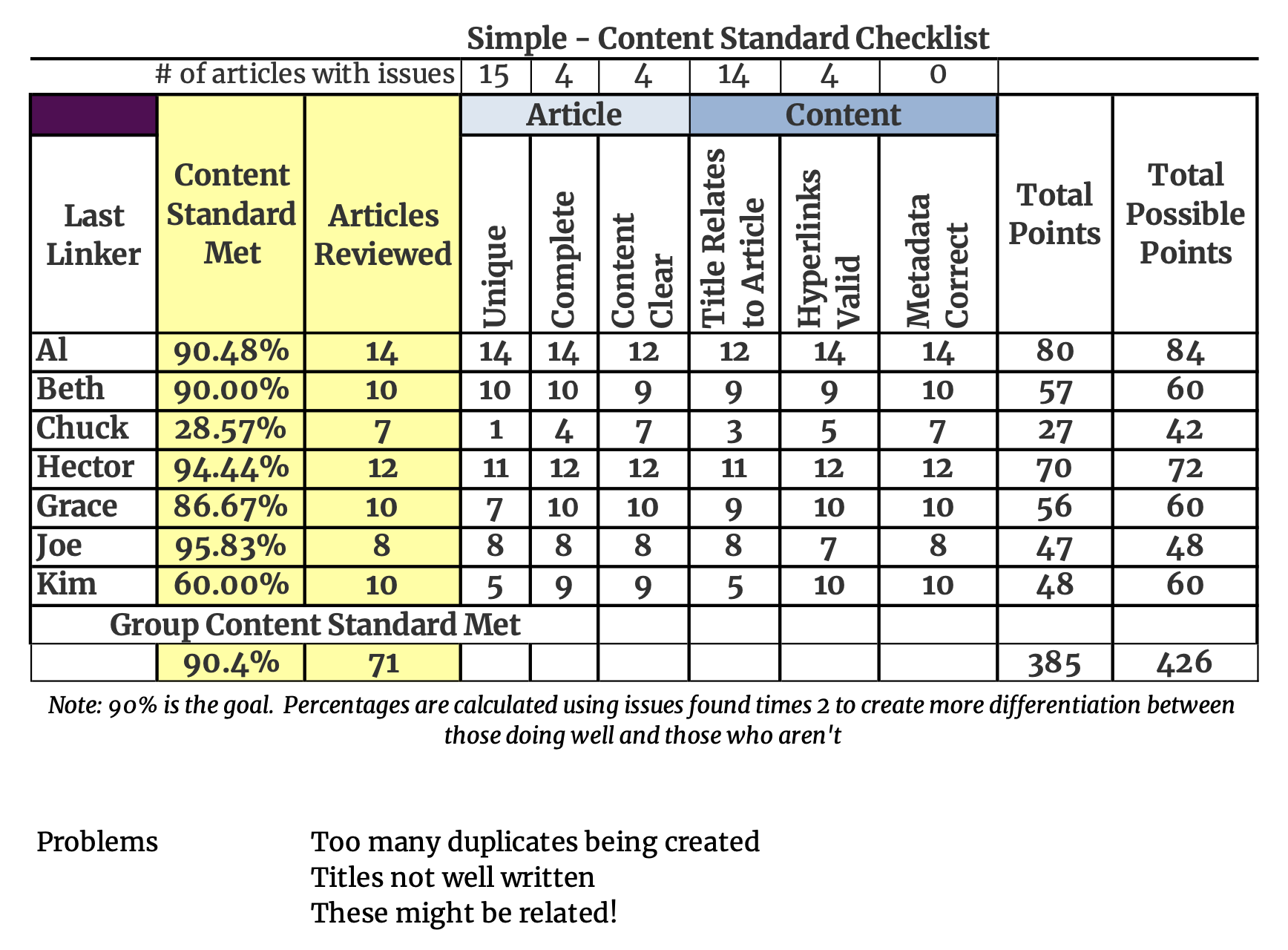

Start simple. Here is an example of a Content Standard Checklist spreadsheet focused on the big six items:

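Percentages are calculated by doubling the number of missed points (total possible points minus points earned), subtracting the result from the total possible points, and dividing by the total possible points. In Excel, the formula for Al's Content Standard Checklist % is =1-(2*(L5-K5))/L5, where L5 holds the total possible points and K5 holds the points earned.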

In both example spreadsheets here, errors carry a weight of 2. Doubling the penalty spreads the percentages out, which makes it easier to distinguish those who are doing well from those who need some help. In these examples, anyone with a Content Standard Checklist percentage below 90% should get some attention from a Coach. Anyone consistently below 80% is at risk of losing their KCS license. It is important to monitor trends in Content Standard Checklist percentages over time for both teams and individuals.
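The spreadsheet formula and the coaching thresholds can be mirrored in a few lines of Python. This is a minimal sketch that assumes `points_possible` corresponds to cell L5 and `points_earned` to K5. The function names are hypothetical, and so is the reading of "consistently below 80%" as the last three review periods.

```python
def checklist_percentage(points_earned: int, points_possible: int,
                         error_weight: int = 2) -> float:
    """Mirror of =1-(2*(L5-K5))/L5, expressed as a percentage.

    Each missed point counts error_weight times, which spreads scores apart.
    """
    if points_possible <= 0:
        raise ValueError("points_possible must be positive")
    missed = points_possible - points_earned
    return 100.0 * (1 - error_weight * missed / points_possible)


def coaching_flag(history: list[float], consistent_periods: int = 3) -> str:
    """Classify a worker's recent checklist percentages (oldest first).

    'Consistently below 80' is assumed to mean every one of the last
    consistent_periods results; that window length is a hypothetical choice.
    """
    recent = history[-consistent_periods:]
    if len(recent) == consistent_periods and all(p < 80 for p in recent):
        return "license at risk"
    if history[-1] < 90:
        return "coach attention"
    return "ok"
```

For example, earning 58 of 60 points gives about 93.3%, while earning 54 of 60 gives 80%. With a weight of 2, anyone who misses more than half the points gets a negative percentage, just as the spreadsheet formula would.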

This matrix can be customized to suit an organization's requirements. A consumer product may need more emphasis on usability and formatting compared to a highly technical audience. But: don't over-engineer it! Start simple and evolve it based on experience.

Over time, as our KCS adoption matures and the organization gets good at the basics, we might add more granular areas of focus. We find that the content standard is 70-80% common across organizations and 20-30% tailored to a specific organization. Some of the factors in the criteria will be influenced by the knowledge management technology being used in the environment. The content standard sets the criteria for the checklist, and must be tailored to the environment and the tools being used.

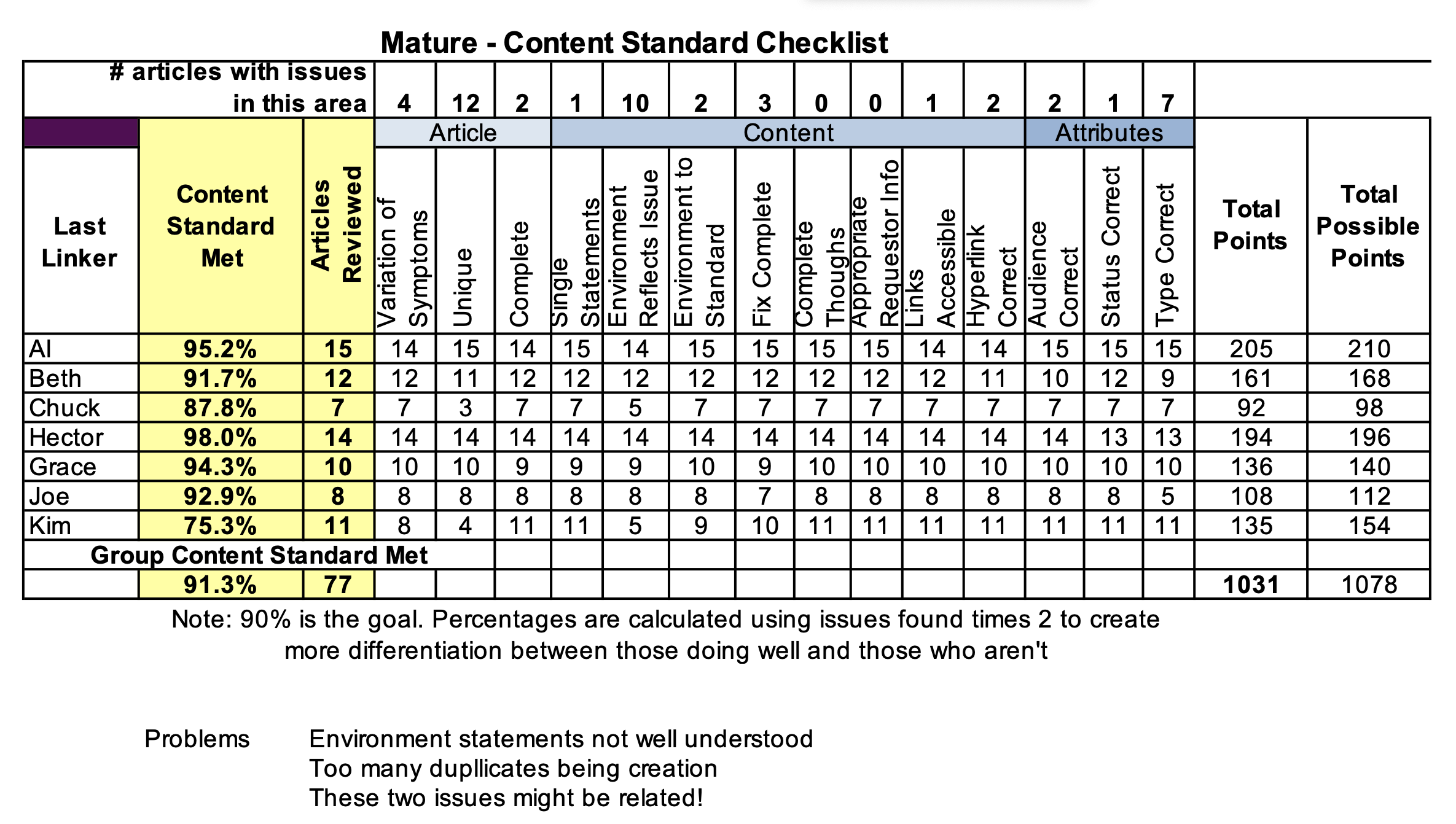

Following is a sample Content Standard Checklist spreadsheet in a mature KCS environment, for example purposes only.

Some key ideas to note in the mature example (for more details, see Practice 7: Performance Assessment):

- Compare the number of articles reviewed for each creator. A legitimate sample size is important: creators Chuck and Ed may have too few articles reviewed to be evaluated fairly.

- In the first row (# of articles with issues in this area), organizational performance is visible. This is a great area to focus on with groups of KCS Coaches. In this example, many articles are duplicates, incomplete, or unusable. This result could mean knowledge workers need more training on searching and documenting content.

- Kim is a prolific contributor but also leads in the top three problem categories. Attention from a KCS Coach is merited.
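The three observations above can all be computed from the same review data. This Python sketch assumes reviews are grouped by creator, with each review stored as a dict of criterion answers in which `False` means an issue. The minimum sample size of 5 and all function names are hypothetical placeholders.

```python
from collections import Counter


def issues_by_criterion(reviews_by_creator: dict[str, list[dict[str, bool]]]) -> Counter:
    """Organization-wide count of sampled articles with an issue in each area."""
    counts: Counter = Counter()
    for reviews in reviews_by_creator.values():
        for answers in reviews:
            for criterion, ok in answers.items():
                if not ok:
                    counts[criterion] += 1
    return counts


def thin_samples(reviews_by_creator: dict[str, list[dict[str, bool]]],
                 minimum: int = 5) -> list[str]:
    """Creators with too few reviewed articles to be evaluated fairly (assumed minimum)."""
    return sorted(c for c, reviews in reviews_by_creator.items() if len(reviews) < minimum)


def leader_for(reviews_by_creator: dict[str, list[dict[str, bool]]], criterion: str) -> str:
    """Creator with the most issues in one area (ties go to the first creator listed)."""
    per_creator = {
        creator: sum(1 for a in reviews if not a.get(criterion, True))
        for creator, reviews in reviews_by_creator.items()
    }
    return max(per_creator, key=per_creator.get)
```

Because raw issue counts favor prolific contributors, a coach would typically read `leader_for` alongside each creator's sample size and percentage rather than on its own.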

Evolve the Content Standard Checklist items based on experience. As an organization matures in its use and confidence in KCS, it becomes easier to pay attention to more granular or refined content considerations like versioning, global distribution, use of multimedia, and measuring team-based contribution in addition to individual contribution.

Many mature organizations develop a weighting system for these more complex criteria, because violations do not all have the same impact. For example, a duplicate article is a more serious error than an article that is too wordy. (See the Progress Software case study for an example in practice.) Again, the criteria and weighting should reflect the needs of the organization and should be considered only after the organization has had some experience with the Content Standard Checklist process.
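A weighting system can be sketched as a penalty per violation type. The weights below are invented for illustration and are not drawn from the Progress Software case study. The text only establishes that a duplicate should weigh more than wordiness, and each organization would set its own values.

```python
# Hypothetical weights: only "duplicate > too_wordy" comes from the text.
WEIGHTS = {"duplicate": 5, "incomplete": 3, "broken_link": 2, "too_wordy": 1}


def weighted_percentage(articles: list[set[str]],
                        weights: dict[str, int] = WEIGHTS) -> float:
    """Percentage of the maximum possible score retained across sampled articles.

    Each article is the set of violation names a reviewer recorded for it.
    An unknown violation name raises KeyError, so new criteria must be weighted first.
    """
    if not articles:
        raise ValueError("no sampled articles")
    worst_case = len(articles) * sum(weights.values())
    penalty = sum(weights[v] for violations in articles for v in violations)
    return 100.0 * (1 - penalty / worst_case)
```

Under these weights, a single duplicate article scores about 54.5%, while a single wordy article scores about 90.9%. The duplicate therefore pulls the percentage down much further.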

Knowledge Sampling for the Content Standard Checklist

To complete a Content Standard Checklist spreadsheet, a group of qualified reviewers (usually the KCS Coaches) conducts regular knowledge sampling of articles from the knowledge base. Articles are selected randomly and assigned randomly to reviewers, so coaches rarely evaluate their own coachees' articles. Even so, it is important to sample articles from every individual.
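The sampling step can be sketched with Python's standard `random` module. This sketch assumes article IDs are grouped by creator and that each creator's coach is known. It goes one step further than the text by explicitly excluding a creator's own coach whenever another reviewer is available. The per-creator quota and all names are hypothetical.

```python
import random


def sample_articles(articles_by_creator: dict[str, list[str]],
                    per_creator: int, rng: random.Random) -> dict[str, list[str]]:
    """Randomly pick up to per_creator articles from every creator, so nobody is skipped."""
    return {
        creator: rng.sample(ids, min(per_creator, len(ids)))
        for creator, ids in articles_by_creator.items()
    }


def assign_reviewers(sample: dict[str, list[str]], reviewers: list[str],
                     coach_of: dict[str, str], rng: random.Random) -> dict[str, str]:
    """Randomly assign each sampled article to a reviewer.

    The creator and the creator's own coach are excluded when another
    reviewer exists; otherwise we fall back to the full reviewer list.
    """
    assignment: dict[str, str] = {}
    for creator, ids in sample.items():
        eligible = [r for r in reviewers if r not in (creator, coach_of.get(creator))]
        pool = eligible or reviewers
        for article_id in ids:
            assignment[article_id] = rng.choice(pool)
    return assignment
```

Passing a seeded `random.Random` makes a sampling round reproducible, which helps if a reviewer's results are later questioned.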

Here is a typical process:

- Develop a Content Standard Checklist and criteria

- Evaluate a sample of articles

- Calculate the content standard percentage met and develop summary reports

- Provide regular feedback to the knowledge workers on possible content standard areas of focus with comments from the Coach who did the evaluation

- Provide periodic feedback to leadership

The Content Standard Checklist should be used for learning and growth of the knowledge workers. During rollout and training, the frequency of this monitoring should be weekly. It will take more time due to the high number of KCS Candidates (people learning KCS). Once the organization has matured, the frequency is typically monthly and should not consume more than a few hours of time per month per reviewer. Note that what the organization focuses on around the content standard should change over time. The elements for assessment at the beginning of a KCS adoption will be more basic than those things the organization will focus on two years into the knowledge journey.

There are a number of considerations for monitoring knowledge health in organizations. With the Content Standard Checklist, we are concerned with an article's compliance with the content standard. Other areas to consider include the Process Adherence Review (PAR), case documentation and handling, customer interaction, and technical accuracy. Organizations have various ways to monitor the health and quality of these important elements of the process.

As organizations reflect on their processes in the Evolve Loop, they are identifying key monitoring elements and ways to integrate monitoring across the processes. One element emerging as critical to monitor regularly is link accuracy. Link accuracy is part of the PAR, which also uses a sampling technique and can be integrated into the Content Standard Checklist process. Link accuracy is also assessed in the New vs. Known Analysis.

Link rates (percentage of cases closed with an article linked) and link accuracy (the article resolves the issue raised in the case) are the key enabling elements for identifying the top issues that are driving support cost (case volume) and user disruption. In order to provide credible and actionable input to product management and development about the highest impact issues, we need to have link rates that exceed 60% and link accuracy that exceeds 90%. Link accuracy is more important than link rate.
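Both link measures are simple ratios once the data exists. This sketch assumes each closed case records whether an article was linked. For cases drawn into the PAR sample, it also records a reviewer's judgment of whether the linked article actually resolves the issue. The record layout and function names are hypothetical. The thresholds match the text: a link rate above 60% and link accuracy above 90%.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClosedCase:
    case_id: str
    linked_article: Optional[str]         # None if closed without a linked article
    link_accurate: Optional[bool] = None  # reviewer's verdict; None if not sampled


def link_rate(cases: list[ClosedCase]) -> float:
    """Percentage of closed cases that have an article linked."""
    if not cases:
        raise ValueError("no closed cases")
    return 100.0 * sum(c.linked_article is not None for c in cases) / len(cases)


def link_accuracy(cases: list[ClosedCase]) -> float:
    """Percentage of reviewed links where the article resolves the case's issue."""
    reviewed = [c for c in cases
                if c.linked_article is not None and c.link_accurate is not None]
    if not reviewed:
        raise ValueError("no reviewed links")
    return 100.0 * sum(c.link_accurate for c in reviewed) / len(reviewed)


def credible_for_product_feedback(cases: list[ClosedCase],
                                  min_rate: float = 60.0,
                                  min_accuracy: float = 90.0) -> bool:
    """True when both link rate and link accuracy exceed the stated thresholds."""
    return link_rate(cases) > min_rate and link_accuracy(cases) > min_accuracy
```

Accuracy is computed only over reviewed links, so its reliability depends on how many links the PAR sample covers.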

Reviewing Articles Through Use

The KCS Principle of demand driven and the Core Concept of collective ownership combine to create efficiency. It is critical that people feel a sense of responsibility for the quality of the articles they interact with. The alternative, in which someone else owns article quality and is responsible for reviewing it, carries a prohibitive cost and delay. This sense of collective responsibility is reinforced through coaching, the competency/licensing program, communications from the leaders, the performance assessment program, and the recognition programs. The new hero in the organization is the person who creates value through their contribution to the knowledge base, not the person who knows the most and has the longest line outside their cube.

Feedback to the Knowledge Worker

Knowledge workers must have visibility into their Content Standard Checklist evaluations so they understand where to self-correct. Again, this should happen as part of a conversation about understanding and behavior, not about a percentage or number. Content Standard Checklist results are also a key tool for the Coaches, because they help Coaches identify opportunities for learning and growth.

Assessing the Value of Articles

As we move through the KCS adoption phases, the knowledge base will grow. We will want a way to assess the value of the articles in the knowledge base. There are three perspectives to keep in mind when assessing the value of articles: frequency of reuse, frequency of reference and the value of the collection of articles. The reuse frequency is a strong indicator of the value of an individual article and is fairly easy to assess. The frequency of reference is equally important and is much harder to assess. The value of the collection of articles has to be looked at from a systemic point of view.

Article Value Based on Reuse

The value of any particular article can be measured by the number of times it is used to resolve an issue. If we are linking articles to incidents, we can easily calculate the reuse count. As we expand into the Build Proficiency phase of the KCS transformation, measuring the reuse of articles becomes much more difficult because customers using the article through self-service do not link articles to incidents nor do they show much interest in answering the oft-asked question, "Was this article helpful?" To assess the value of individual articles in a self-service model, we have to infer value based on a number of factors.

A few Consortium Members have developed article value calculators that take into account the following:

- Page views

- Internal links

- Customer feedback (Member experience indicates that customers provide feedback on only a tiny percentage of the articles they view: 1-2%)
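The Members' calculators are not described in enough detail to reproduce, so the following is only a hypothetical sketch of how the three factors might be combined into a single score. The weights are placeholders to tune. "Internal links" is interpreted here as the number of times staff linked the article to an incident, and that reading is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class ArticleUsage:
    article_id: str
    page_views: int
    internal_links: int   # assumed meaning: times staff linked the article to an incident
    helpful_votes: int
    unhelpful_votes: int


def value_score(u: ArticleUsage, view_weight: float = 0.01,
                link_weight: float = 1.0, feedback_weight: float = 2.0) -> float:
    """Hypothetical linear value score; all three weights are placeholders to tune.

    Feedback is rare (1-2% of views), so each net vote is weighted more than a view.
    """
    net_feedback = u.helpful_votes - u.unhelpful_votes
    return (view_weight * u.page_views
            + link_weight * u.internal_links
            + feedback_weight * net_feedback)


def rank_by_value(usages: list[ArticleUsage]) -> list[str]:
    """Article IDs ordered from highest to lowest inferred value."""
    return [u.article_id for u in sorted(usages, key=value_score, reverse=True)]
```

A linear score is easy to explain to authors and coaches. A real calculator might instead normalize by article age or audience size.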

Article Value Based on Reference

The second perspective is the frequency of reference. Even though a specific article may not resolve the issue, an article about a similar issue may provide some insight or remind us of an approach or diagnostic technique that we know but had not thought of. The frequency of reference is extremely valuable and hard to measure.

The Value of the Collection of Articles

The indicators for the value of the collection of content can be calculated based on the rate of customers' use and success with self-service. More specifically, support organizations often look at the subset of the self-service success rate that represents issues for which the customer would have opened an incident had they not found an answer through self-service. This is often referred to with the unfortunate vocabulary of "call avoidance" or "case deflection." This avoidance or deflection view represents a vendor-centric view of support, not a customer-centric view. A customer-centric view does not avoid or deflect customers; it promotes customer success through the path of least resistance and greatest success - for the customer!

How Good is Good Enough?

One of the things we learned from W. Edwards Deming, the father of the quality revolution, is that quality is assessed against a standard or criteria. Quality is not a standalone, universal thing; it is specific to a purpose. In order to manage the quality of our output, we have to know the criteria for what is acceptable and what is not. In the KCS methodology, the quality criteria for knowledge articles are defined in the content standard. However, not all knowledge articles are equal in their importance or purpose. Most organizations deal with different types of knowledge, and not all types of articles have the same criteria for quality. For example, some knowledge articles capture the experience of people getting their work done (where we have a high tolerance for variability and interpretation), while other types of articles describe company policies or regulatory requirements imposed by law for which we have no tolerance for variability or interpretation.

How good is good enough? Well, it depends on the type of information we are dealing with. By identifying in very broad categories the different types of information and their related compliance requirements, we can define both the criteria for a quality article and the governance we need for each type of article. A word of caution here: we do not want to over-engineer the number of article types. We want to start with the minimum, which is often just two: experience-based and compliance-based content. Then adjust the article types and criteria based on our experience. Each organization that adopts KCS must define what is good enough for their various audiences and the types of knowledge they deal with. Not all knowledge articles will have the same quality requirements.

To understand article quality issues better, the Consortium surveyed its Members' customers. Approximately 67% of the participants were large enterprises (highly complex business production environments of more than 300 users) and 27% were small to medium businesses (business production environments of fewer than 300 users), from the Americas, Europe, the Middle East, and Africa. The remaining 6% were consumers.

This survey assessed customer needs and quality criteria with respect to web-delivered KCS articles providing technical knowledge. This KCS article content could be in the form of known problems, technical updates, or other knowledge base articles. Almost all of the respondents were already comfortable using web self-help, so they may be considered advanced users. Based on experience, however, we believe the results can be extrapolated to reflect knowledge base content as a whole.

Customer responses to the survey indicate that articles need to be good enough to be findable and usable, or what we call "sufficient to solve."

Getting the Basics Right

To begin with, we examined the basic content requirement—the material that must be included in the KCS article. Respondents chose the following, mostly in the category of "accuracy," as "very important." Responses are listed in priority order:

- Technically accurate and relevant

- Problem and solution description

- Cause of problem

- Complete information

- Quickly found

- Clarity of content

- Valid hyperlinks

- Configuration information

- Vendor's sense of confidence in the answer

Considered "somewhat important," mostly in the category of "editing and format," were:

- Compound vs. single thoughts

- Complete sentences vs. short statements

- Date created

- Correct spelling

- Grammar

- Last modified

- No duplication of information

- Frequency of usage

- Punctuation

Of "least importance," perhaps not surprising in a technical audience, were the attributes:

- Legal disclosures

- Correct trademarks

- Date last used

Impact on Company Image

Most respondents considered editorial format somewhat important. Since the process involved in achieving editorial perfection can be time-consuming and delay access to information, we decided to assess the impact on corporate image of publishing KCS article information at various levels of editorial quality. The results were revealing. The majority of respondents:

- Disagreed with or were neutral to the statement: "I have a lesser image of a company that withholds support information access in order to technically validate it." (In other words, the majority of respondents did not fault a company for withholding information that was not technically validated.)

- Agreed with the statement: "I have a lesser image of a company that withholds support information access in order to achieve editorial perfection."

- Agreed with the statement: "To gain knowledge faster, I would like an option to select to see support information that has not been fully validated."

- Agreed with the statement: "To gain knowledge faster, I would be willing to take responsibility for using any of the incomplete information should there be mistakes." Note: To mitigate risk from sharing this knowledge, many support organizations require customers to accept a disclosure agreement before seeing the KCS article.

- Would have a higher or at least the same opinion when asked: "If the support information were marked as being in draft format, what opinion would you have of a company that shared everything they know, even if it had editorial mistakes?"

Time/Value Tradeoff: KCS Recommendations

From this survey feedback coupled with other experience implementing KCS, the Consortium feels confident recommending that organizations invest in content speed and accuracy over presentation and format. We should strive for timely and accurate knowledge, ensure we are investing appropriately in training, have a good balance of competencies, develop a licensing model (see the roles section in Practice 7, Performance Assessment), and follow the recommendations for maintaining just-in-time KCS article quality through a sampling process and the creation of the Content Standard Checklist.

When it comes to information completeness and degree of validation, organizations must individually assess the risk-benefit tradeoff of sharing information early. The Consortium's findings should not be used as a substitute for asking customers about their needs in this area. In our experience, the just-in-time information model has become increasingly accepted as the business community has embraced open source, monthly and quarterly software releases, and extended and open beta-testing programs. Appropriate disclaimers, click-to-acknowledge interfaces, and a clear indication of KCS article status (confidence) are all ways to make the KCS article visible earlier and let the customer determine their own risk profile for the situation.